The Hyve has advocated for the adoption of the FAIR principles from the very beginning, and recently we have noticed the focus shifting towards sustainability. FAIR lets powerful new AI analytics access data for machine learning and prediction. It is a fundamental enabler of the digital transformation of biopharma R&D, and this is what excites me about our work. All the conversations we have with our clients share an important element: a strong will to improve and speed up medical and pharmaceutical research, to save lives and to make personalised medicine and affordable drugs the norm.

The FAIR principles describe how research output should be organised so it can be more easily accessed, understood, exchanged and reused. In times of pandemic, working with many of the top pharma companies that want to implement these guidelines gives me hope and gives my work a higher purpose.

Our four-year journey with the FAIR principles at The Hyve has allowed us to find answers to questions like: Why are the FAIR principles important when planning a data strategy? How do they help an organization that uses a multitude of tools and systems? How can FAIR guidelines accelerate discovery pipelines? Why should I FAIRify legacy data? How can external data from CROs or other data partners be natively FAIR?

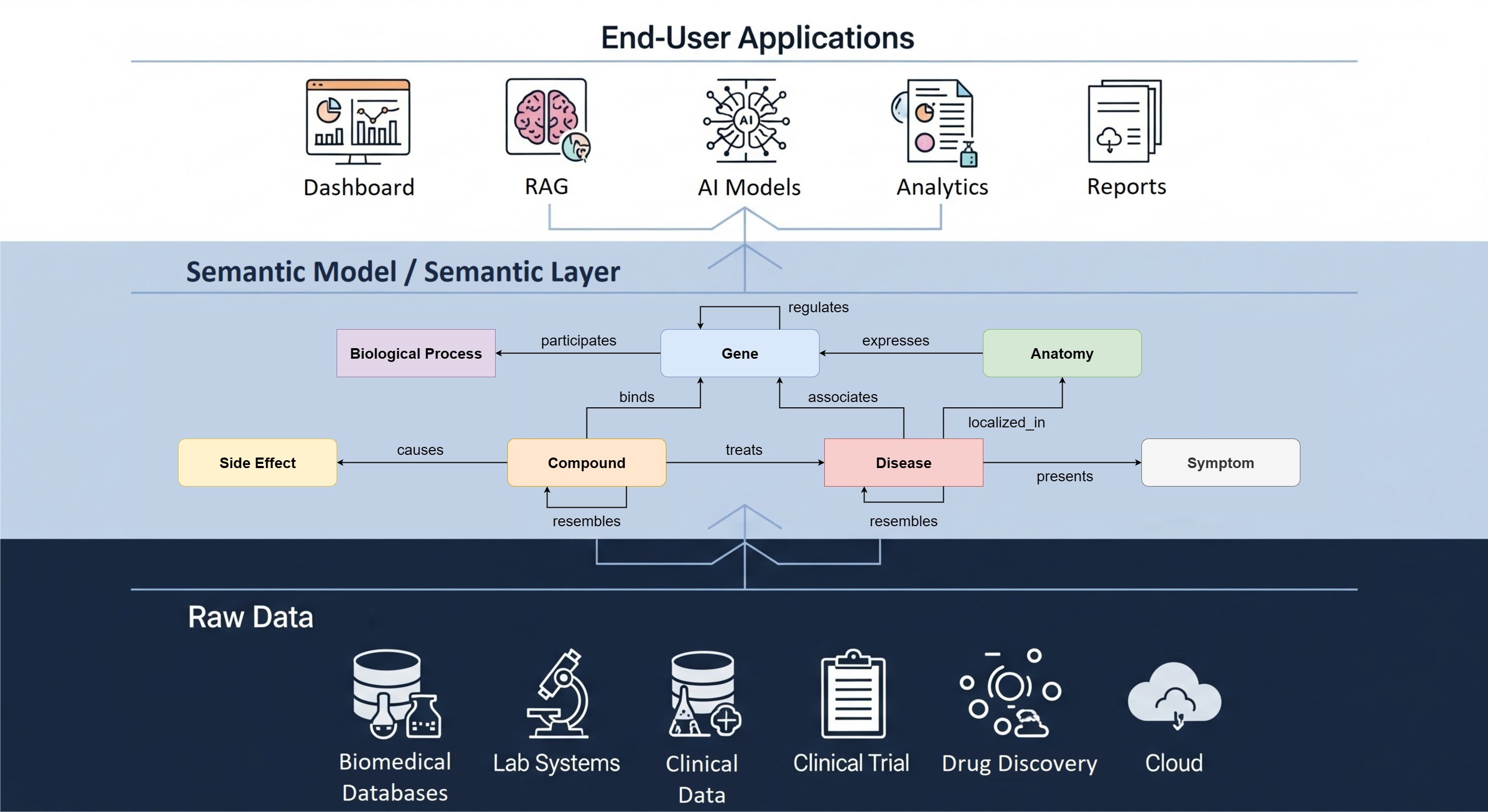

The technical solution in all these cases is the same: a cross-domain, semantically interoperable data layer, built with the FAIR principles in mind. Some of you may find it difficult to stay up to date with all the new technology trends and wonder how to tell hype from fact. Don’t worry, The Hyve is here to help. Our colleagues like to try out new things together with other pioneers in the field, and to share their insights with the larger biomedical research community.

When I started my career in IT, my work in solution sales focused on helping large corporations migrate from monolithic architectures to cloud-native solutions. Data mesh is now the new black in information architecture, which is why at The Hyve we center our advice on metadata-driven approaches and distributed solutions that thrive in a culture of collaboration.

Semantic interoperability enables systems to derive meaning from data in context: the correct implementation of each layer contributes to an interoperability waterfall, which boosts the value of your data. The path is paved with intermediary steps that take time to implement. It is in these intermediary steps that The Hyve specializes, with our diverse set of skills and technologies. Our consultants strive to facilitate the use of knowledge graphs to visualize data lakes, harmonize assets and interconnect them with leading systems and external resources. Ideally, the work starts with exploring the data landscape: the representation of an organization’s data assets, storage options, systems for creating, analysing, processing and storing data, and other applications present in the enterprise’s data environment. You then define provenance policies, get the plumbing right around URIs, and agree on semantics and metadata conventions. Last but not least, you conform vocabularies and ontologies to public standards.
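To make the last two steps a bit more concrete, here is a minimal sketch in Python of what "getting the plumbing right around URIs" and "conforming vocabularies to public standards" could look like for a single metadata record. It is an illustration only, not a description of The Hyve's tooling: the `https://example.org/dataset/` namespace, the in-house keys (`name`, `owner`, `licence`, `created_on`) and the function names are all assumptions. The Dublin Core term IRIs are the one real public vocabulary used here.

```python
from urllib.parse import quote

# Hypothetical namespace for minting identifiers; replace with your own.
BASE_URI = "https://example.org/dataset/"

# Assumed in-house metadata keys mapped to public Dublin Core term IRIs.
LOCAL_TO_DCTERMS = {
    "name": "http://purl.org/dc/terms/title",
    "owner": "http://purl.org/dc/terms/creator",
    "licence": "http://purl.org/dc/terms/license",
    "created_on": "http://purl.org/dc/terms/created",
}


def mint_uri(local_id: str) -> str:
    """Build a URI from a local identifier using one fixed convention."""
    return BASE_URI + quote(local_id.strip().lower(), safe="")


def harmonize(local_id: str, record: dict) -> tuple[dict, list[str]]:
    """Re-key a record with public term IRIs and report keys with no mapping."""
    harmonized = {"@id": mint_uri(local_id)}
    unmapped = []
    for key, value in record.items():
        term = LOCAL_TO_DCTERMS.get(key)
        if term is None:
            unmapped.append(key)  # needs a decision from a data steward
        else:
            harmonized[term] = value
    return harmonized, unmapped


if __name__ == "__main__":
    doc, todo = harmonize(
        " Study 42 ",
        {"name": "Trial A", "owner": "Lab X", "batch": 7},
    )
    print(doc)
    print("Keys without a public term:", todo)
```

The list of unmapped keys is the useful part in practice: it shows where the organization still has to agree on semantics before the data can be called interoperable.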

The FAIR principles can help at every step of the way when building an interoperable data layer. It is important that the rules for creating and maintaining this layer are crystal clear and meet the needs of a broad set of users (data stewards, tool builders, business users and end consumers of data). A FAIR big data fabric provides a comprehensive view of business data and orchestrates data sources automatically, preparing them for processing and analysis.

FAIR assessment

But the questions quickly arise: Where to start? How to check that you are on the right track? Here, a FAIR assessment comes in handy: a principled approach to determining the FAIRness of specific digital assets, such as datasets, software applications and standards. It can also be used to evaluate how mature an organization, or part of it, is in adopting the FAIR principles. Think of the assessment as a health check-up. This evaluation can be embedded in the operational routine of, for example, a Data Office or CIO, to provide a snapshot of the impact of FAIR at a specific point in time. The assessment presents the results and all possible constraints in a language that speaks to C-level, IT and bioinformatics staff alike. Such insights facilitate discussion and nurture the culture of collaboration needed to plan any additional FAIRification work (the implementation of FAIR).
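As a rough illustration of the health check-up idea, the sketch below scores one dataset's metadata against a handful of yes/no checks grouped by the four FAIR letters. It is a toy under stated assumptions, not an official FAIR metric and not The Hyve's assessment method: the checks, the metadata field names (`identifier`, `keywords`, `access_url`, `vocabularies`, `license`, `provenance`) and the equal weighting are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Metadata = Dict[str, object]


@dataclass(frozen=True)
class Check:
    principle: str  # one of "F", "A", "I", "R"
    description: str
    passes: Callable[[Metadata], bool]


# Hypothetical checklist; a real assessment would use agreed indicators.
CHECKS: List[Check] = [
    Check("F", "has a persistent identifier", lambda m: bool(m.get("identifier"))),
    Check("F", "has descriptive keywords", lambda m: bool(m.get("keywords"))),
    Check("A", "states how the data can be accessed", lambda m: bool(m.get("access_url"))),
    Check("I", "declares the vocabularies it uses", lambda m: bool(m.get("vocabularies"))),
    Check("R", "has a licence", lambda m: bool(m.get("license"))),
    Check("R", "records provenance", lambda m: bool(m.get("provenance"))),
]


def assess(metadata: Metadata) -> Dict[str, float]:
    """Return the fraction of passed checks for each FAIR letter."""
    passed = {letter: 0 for letter in "FAIR"}
    total = {letter: 0 for letter in "FAIR"}
    for check in CHECKS:
        total[check.principle] += 1
        if check.passes(metadata):
            passed[check.principle] += 1
    return {letter: passed[letter] / total[letter] for letter in "FAIR"}


if __name__ == "__main__":
    snapshot = assess(
        {"identifier": "https://example.org/dataset/1", "license": "CC-BY-4.0"}
    )
    for letter, score in snapshot.items():
        print(f"{letter}: {score:.0%}")
```

Running the same checks at regular intervals gives the point-in-time snapshots described above, and the failed checks are a ready-made agenda for the next FAIRification discussion.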

We understand that the FAIR journey has many twists and turns. It requires an answer to questions like: What is the business problem we are trying to solve with FAIR? What questions does our FAIR data need to answer? Is FAIR an alternative process to something we are already doing?

One thing is certain: during a FAIRification process you will learn a lot about your data. Too much data, too little, too diverse… don’t despair. As Andrew McAfee once said: “The world is one big data problem.”

The Hyve’s consultants can help you get started with a FAIR assessment and develop a FAIR blueprint for your data. Book a free consultation with one of our experts to get started with FAIR in your company, or to follow up on work already under way.